BTOES From Home - SPEAKER ...

Courtesy of Nintex Pty's Paul Hsu, below is a transcript of his speaking session on 'Improve employee productivity during and post-COVID by ...

Artificial intelligence (AI) refers to the simulation of human intelligence in machines that are programmed to think like humans and mimic their actions. The term may also be applied to any machine that exhibits traits associated with a human mind such as learning and problem-solving.

The ideal characteristic of artificial intelligence is its ability to rationalize and take actions that have the best chance of achieving a specific goal.

Artificial intelligence makes it possible for machines to learn from experience, adjust to new inputs and perform human-like tasks. Many of the AI examples you hear about today, from chess-playing computers to self-driving cars, rely heavily on deep learning, and systems that work with speech or text also rely on natural language processing. Using these technologies, computers can be trained to accomplish specific tasks by processing large amounts of data and recognizing patterns in the data.

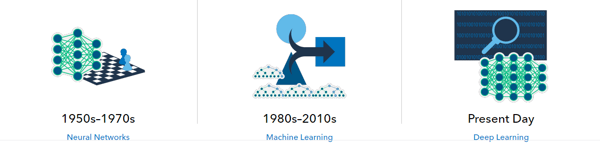

The term artificial intelligence was coined in 1956, but AI has become more popular today thanks to increased data volumes, advanced algorithms, and improvements in computing power and storage.

Early AI research in the 1950s explored topics like problem solving and symbolic methods. In the 1960s, the US Department of Defense took an interest in this type of work and began training computers to mimic basic human reasoning. For example, the Defense Advanced Research Projects Agency (DARPA) completed street mapping projects in the 1970s. And DARPA produced intelligent personal assistants in 2003, long before Siri, Alexa or Cortana were household names.

This early work paved the way for the automation and formal reasoning that we see in computers today, including decision support systems and smart search systems that can be designed to complement and augment human abilities.

While Hollywood movies and science fiction novels depict AI as human-like robots that take over the world, the current evolution of AI technologies isn’t that scary – or quite that smart. Instead, AI has evolved to provide many specific benefits in every industry. Keep reading for modern examples of artificial intelligence in health care, retail and more.

When most people hear the term artificial intelligence, the first thing they usually think of is robots. That's because big-budget films and novels weave stories about human-like machines that wreak havoc on Earth. But nothing could be further from the truth.

Artificial intelligence is based on the principle that human intelligence can be defined in a way that a machine can easily mimic it and execute tasks, from the simplest to the most complex. The goals of artificial intelligence include learning, reasoning, and perception.

As technology advances, previous benchmarks that defined artificial intelligence become outdated. For example, machines that calculate basic functions or recognize text through optical character recognition are no longer considered to embody artificial intelligence, since these functions are now taken for granted as inherent computer functions.

AI is continuously evolving to benefit many different industries. AI systems are built using a cross-disciplinary approach that draws on mathematics, computer science, linguistics, psychology, and more.

The applications for artificial intelligence are endless. The technology can be applied to many different sectors and industries. AI is being tested and used in the healthcare industry for dosing drugs, choosing among treatments for patients, and assisting with surgical procedures in the operating room.

Other examples of machines with artificial intelligence include computers that play chess and self-driving cars. Each of these machines must weigh the consequences of any action they take, as each action will impact the end result. In chess, the end result is winning the game. For self-driving cars, the computer system must take in all relevant external data and process it to act in a way that prevents a collision.
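To make "weighing the consequences of each action" concrete, here is a minimal sketch of minimax search, the classic technique behind game-playing programs: each player assumes the opponent will reply with its own best move. The game tree is a hypothetical toy given as nested lists of leaf scores; a real chess engine would generate moves from board positions and add an evaluation function and pruning.

```python
from typing import Union

# A game tree is either a leaf score (int) or a list of child subtrees.
Tree = Union[int, list]


def minimax(node: Tree, maximizing: bool) -> int:
    """Best achievable score from this node, assuming both sides play optimally."""
    if isinstance(node, int):
        return node  # leaf: score of the final position
    child_scores = [minimax(child, not maximizing) for child in node]
    # The maximizing player picks the highest score; the opponent picks the lowest.
    return max(child_scores) if maximizing else min(child_scores)


def best_move(tree: list) -> int:
    """Index of the move the maximizing player should choose at the root."""
    scores = [minimax(child, maximizing=False) for child in tree]
    return scores.index(max(scores))


if __name__ == "__main__":
    # Hypothetical two-ply tree: three candidate moves, each with three opponent replies.
    tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
    print(best_move(tree))                 # 0
    print(minimax(tree, maximizing=True))  # 3
```

Move 2 contains the highest leaf (14), but the opponent can answer it with a reply worth only 2, so the search prefers move 0, whose worst case is 3.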

Artificial intelligence also has applications in the financial industry, where it is used to detect and flag unusual activity in banking and finance, such as atypical debit card usage and large account deposits, all of which help a bank's fraud department. AI applications are also being used to streamline trading by making the supply, demand, and pricing of securities easier to estimate.
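A very simple way to flag unusual activity is to compare each new transaction with the account's own history. The sketch below uses a z-score rule as a stand-in for a real model: a transaction is flagged when it lies more than a chosen number of standard deviations from the account's mean amount. The threshold of 3 and the sample amounts are hypothetical; production fraud systems typically learn from many features and labeled cases rather than a single statistic.

```python
from statistics import mean, stdev


def flag_unusual(history: list[float], new_amounts: list[float],
                 threshold: float = 3.0) -> list[float]:
    """Return new amounts that deviate from past behavior by more than
    `threshold` standard deviations (a simple z-score rule)."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least two past amounts
    if sigma == 0:
        # No variation in the history: anything other than the usual amount is unusual.
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > threshold]


if __name__ == "__main__":
    # Hypothetical past debit card amounts for one account.
    history = [42.0, 38.5, 51.0, 45.0, 40.0, 47.5]
    print(flag_unusual(history, [44.0, 55.0, 2500.0]))  # [2500.0]
```

Here the mean is 44.0 and the standard deviation is about 4.7, so 55.0 (about 2.3 deviations away) passes while 2500.0 is flagged for the fraud team to review.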

Artificial intelligence can be divided into two categories: weak and strong. Weak artificial intelligence embodies a system designed to carry out one particular job. Weak AI systems include game-playing programs, such as the chess example above, and personal assistants such as Amazon's Alexa and Apple's Siri: you ask the assistant a question, and it answers it for you.

Strong artificial intelligence systems are systems that carry out tasks considered to be human-like. These tend to be more complex systems. They are programmed to handle situations in which they may be required to solve problems without a person intervening. These kinds of systems can be found in applications like self-driving cars or in hospital operating rooms.

Since its beginning, artificial intelligence has come under scrutiny from scientists and the public alike. One common theme is the idea that machines will become so advanced that humans will not be able to keep up, and the machines will take off on their own, redesigning themselves at an exponential rate.

Another is that machines can be used to invade people's privacy and can even be weaponized. Other arguments concern the ethics of artificial intelligence and whether intelligent systems such as robots should be granted the same rights as humans.

Self-driving cars have been fairly controversial because their control systems tend to be designed for the lowest possible risk and the fewest casualties. If faced with an unavoidable collision with one of two people, these cars would calculate which option causes the least damage.

Another contentious issue many people have with artificial intelligence is how it may affect human employment. With many industries looking to automate certain jobs through the use of intelligent machinery, there is a concern that people would be pushed out of the workforce. Self-driving cars may remove the need for taxi and car-share drivers, while manufacturers may easily replace human labor with machines, making some human skills obsolete.

Every industry has a high demand for AI capabilities – especially question-answering systems that can be used for legal assistance, patent searches, risk notification and medical research. Other uses of AI include:

In health care, AI applications can provide personalized medicine and X-ray readings. Personal health care assistants can act as life coaches, reminding you to take your pills, exercise or eat healthier.

In retail, AI provides virtual shopping capabilities that offer personalized recommendations and discuss purchase options with the consumer. Stock management and site layout technologies will also be improved with AI.

In manufacturing, AI can analyze factory IoT data as it streams from connected equipment to forecast expected load and demand using recurrent networks, a specific type of deep learning network used with sequence data.

In banking, artificial intelligence enhances the speed, precision and effectiveness of human efforts. Financial institutions can use AI techniques to identify which transactions are likely to be fraudulent, support fast and accurate credit scoring, and automate manually intensive data management tasks.
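The manufacturing example above mentions recurrent networks for sequence data. The sketch below shows only the core recurrence of a single-unit, Elman-style recurrent cell: a hidden state is updated at every time step from the previous state and the current reading, and a linear readout turns the final state into a one-step-ahead forecast. The weights are hypothetical, untrained placeholders; in practice they would be learned from historical sensor data, usually with a deep learning library and many hidden units.

```python
import math


def rnn_forecast(readings: list[float], w_in: float = 0.5, w_rec: float = 0.8,
                 bias: float = 0.0, w_out: float = 2.0, b_out: float = 0.0) -> float:
    """Run a one-unit recurrent cell over a sequence and return a next-step forecast.

    The hidden state h carries information from earlier readings forward in time:
        h_t = tanh(w_in * x_t + w_rec * h_{t-1} + bias)
    The forecast is a linear readout of the final hidden state.
    All weights are hypothetical placeholders, not trained values.
    """
    h = 0.0  # initial hidden state before any reading is seen
    for x in readings:
        h = math.tanh(w_in * x + w_rec * h + bias)
    return w_out * h + b_out


if __name__ == "__main__":
    # Hypothetical normalized load readings streamed from one machine.
    readings = [0.2, 0.4, 0.5, 0.7]
    print(f"Next-step forecast: {rnn_forecast(readings):.3f}")
```

Because the hidden state is fed back into the next step, the same readings in a different order produce a different forecast, which is what makes this kind of network suited to sequence data.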

Artificial intelligence is going to change every industry, but we have to understand its limits.

The principal limitation of AI is that it learns from data. There is no other way in which knowledge can be incorporated. That means any inaccuracies in the data will be reflected in the results. And any additional layers of prediction or analysis have to be added separately.

Today’s AI systems are trained to do a clearly defined task. The system that plays poker cannot play solitaire or chess. The system that detects fraud cannot drive a car or give you legal advice. In fact, an AI system that detects health care fraud cannot accurately detect tax fraud or warranty claims fraud.

In other words, these systems are very, very specialized. They are focused on a single task and are far from behaving like humans.

Likewise, self-learning systems are not autonomous systems. The imagined AI technologies that you see in movies and TV are still science fiction. But computers that can probe complex data to learn and perfect specific tasks are becoming quite common.

AI works by combining large amounts of data with fast, iterative processing and intelligent algorithms, allowing the software to learn automatically from patterns or features in the data. AI is a broad field of study that includes many theories, methods and technologies, as well as major subfields such as deep learning and natural language processing.
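As a small illustration of fast, iterative processing that learns from patterns in data, the sketch below fits a straight line to example points by gradient descent: it starts with a guess, measures its error on the data, and repeatedly nudges its two parameters to reduce that error. The data points, learning rate, and number of passes are hypothetical choices; real AI systems apply the same idea to far larger models and datasets.

```python
def fit_line(xs: list[float], ys: list[float],
             learning_rate: float = 0.05, epochs: int = 2000) -> tuple[float, float]:
    """Learn slope w and intercept b so that y is approximately w*x + b,
    using gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # initial guess
    n = len(xs)
    for _ in range(epochs):
        # Prediction error of the current guess on every training point.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to reduce the error a little.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    # Hypothetical training data that follows y = 2x + 1.
    w, b = fit_line([0.0, 1.0, 2.0, 3.0, 4.0], [1.0, 3.0, 5.0, 7.0, 9.0])
    print(f"w = {w:.2f}, b = {b:.2f}")  # w = 2.00, b = 1.00
```

Because the model only ever sees these points, any errors in the data shift the learned w and b directly, which is the data-dependence limitation described earlier.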

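Additionally, several technologies enable and support AI, including increased data volumes, advanced algorithms, and improvements in computing power and storage.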

In summary, the goal of AI is to provide software that can reason on input and explain on output. AI will provide human-like interactions with software and offer decision support for specific tasks, but it’s not a replacement for humans – and won’t be anytime soon.

Welcome to BTOES Insights, the content portal for Business Transformation & Operational Excellence opinions, reports & news.

50 Grosvenor Hill. London W1K 3QT.

United Kingdom.

Copyright 2023 Proqis®